Introduction

Responsible AI, often called RAI, is becoming essential for organizations that want to grow steadily and build real trust. When companies invest in RAI, they position themselves to be more competitive. Around 46% of executives now see responsible AI as a top objective for gaining an edge over rivals. RAI operates at the intersection of ethical principles, technical soundness, and genuine customer value, helping businesses avoid the pitfalls that come with rushed or unchecked AI adoption.

Responsible AI adoption in the Agentic Era introduces a new wave of opportunities and risks that require thoughtful navigation. This era is marked by autonomous AI systems capable of making rapid, complex decisions with minimal human intervention. The push for responsible AI is not just about building smarter machines; it is about embedding ethical principles like fairness, transparency, and inclusivity from day one.

Organizations are actively developing clear accountability frameworks so that no single person or department bears the burden alone when critical decisions are made by AI agents. What stands out is that responsible AI is seen not as a checklist, but as a continuous journey where every employee, partner, and customer is part of the process.

One of the strongest benefits is that RAI goes beyond legal compliance; it builds trust with customers and partners by making AI decisions transparent and fair. This not only improves reputation but also leads to higher customer retention rates and stronger engagement, both crucial ingredients for growth in any sector. RAI also acts as a safeguard, reducing operational and reputational risks by keeping systems accountable and secure. This resilience allows organizations to innovate confidently, knowing that they can move quickly without risking the company’s integrity or public trust.

Key Insights

AI Incidents and Business Impact

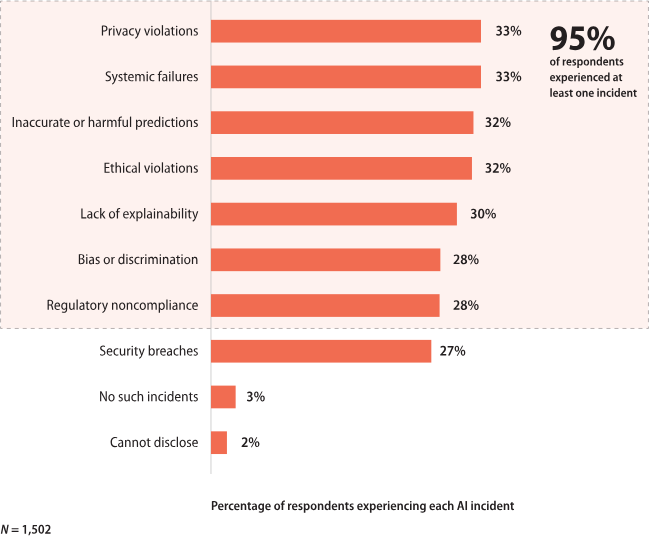

- 95% of executives have already experienced at least one problematic incident in their enterprise AI usage.

- 72% of those who faced incidents rated the impact as moderately severe or worse.

- In 77% of cases, the damage came as direct financial loss, yet executives considered reputational damage more threatening to long-term business sustainability.

Responsible AI (RAI) and Regulations

- Executives widely recognize that Responsible AI (RAI) practices drive business growth.

- A majority welcome new AI regulations, as they believe regulations will bring clarity, confidence, and trust, both internally and for customers.

- This reflects a shift from AI being viewed solely as a tech capability to being seen as a governance and trust enabler.

Team Size vs. Success Rate

- Larger RAI teams allow companies to run more AI initiatives simultaneously, but the success rate declines.

- Success rates fell from 24% to 21% as teams scaled, suggesting that complexity and coordination challenges outweigh the benefits of larger teams.

Maturity and RAI Leadership Gap

- Infosys’ RAISE BAR framework (trust, risk mitigation, governance, sustainability) revealed very few leaders.

- Less than 2% of companies meet the highest RAI standards.

- 15% are followers, but the majority are still lagging in responsible AI adoption.

(Source – Infosys)

Transparency is at the heart of responsible agentic AI. The current generation of AI systems makes thousands of decisions per minute, so ensuring that each step is explainable and traceable is essential for building trust. This means implementing models that provide confidence scores and decision trails while maintaining detailed documentation.

Stakeholder education also plays a big role. Companies are offering interactive guides and dashboards to help users understand how these AI agents reach decisions, which fosters a sense of collaboration between technology and its users.

Privacy and data security form another crucial aspect of this landscape. Agentic AI often relies on massive datasets, which heightens concerns about personal privacy and data misuse. To address this, organizations are adopting robust data governance measures such as encryption and anonymization, and conducting routine audits to ensure compliance with global standards. When data breaches occur, the stakes are high: not just financial losses but also loss of user trust. Companies therefore invest heavily in preventive measures and rapid response protocols.
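To make the ideas of confidence scores, decision trails, and protected identifiers more concrete, here is a minimal Python sketch. It is an illustration only: the names `DecisionTrail`, `DecisionRecord`, `pseudonymize`, and the `review_threshold` value are hypothetical and do not come from any of the cited sources. Note also that a keyed hash pseudonymizes an identifier; it does not fully anonymize it.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a raw identifier with a keyed SHA-256 digest (pseudonymization only)."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


@dataclass
class DecisionRecord:
    """One traceable step taken by an agent (hypothetical schema)."""
    agent: str
    action: str
    confidence: float  # model-reported score in [0, 1]
    rationale: str     # short human-readable explanation
    subject: str       # pseudonymized identifier, never the raw one
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionTrail:
    """Append-only log of agent decisions with a simple review rule."""

    def __init__(self, secret_key: bytes, review_threshold: float = 0.8) -> None:
        self._secret_key = secret_key
        self.review_threshold = review_threshold  # assumed cut-off for human review
        self.records: list[DecisionRecord] = []

    def record(self, agent: str, action: str, confidence: float,
               rationale: str, user_id: str) -> DecisionRecord:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        entry = DecisionRecord(
            agent=agent,
            action=action,
            confidence=confidence,
            rationale=rationale,
            subject=pseudonymize(user_id, self._secret_key),
        )
        self.records.append(entry)
        return entry

    def needs_review(self) -> list[DecisionRecord]:
        """Decisions whose confidence falls below the review threshold."""
        return [r for r in self.records if r.confidence < self.review_threshold]

    def to_json(self) -> str:
        """Serialize the full trail for audits or dashboards."""
        return json.dumps([asdict(r) for r in self.records], indent=2)


trail = DecisionTrail(secret_key=b"demo-key-not-for-production")
trail.record("refund-agent", "approve_refund", 0.93,
             "Order matched return policy", "alice@example.com")
trail.record("refund-agent", "deny_refund", 0.55,
             "Ambiguous purchase date", "bob@example.com")
for item in trail.needs_review():
    print(item.action, item.confidence)
```

In a real deployment the threshold, the stored fields, and the key management would depend on the organization's own governance policy; the point here is only that every decision leaves an explainable, auditable record without exposing raw personal identifiers.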

AI-Related Issues in Enterprises

(Source – Infosys)

- 95% of respondents faced at least one AI incident.

- 33% experienced privacy violations.

- 33% reported systemic failures.

- 32% encountered inaccurate or harmful predictions.

- 32% faced ethical violations.

- 30% struggled with lack of explainability.

- 28% dealt with bias or discrimination.

- 28% had regulatory noncompliance issues.

- 27% reported security breaches.

- Only 3% saw no AI-related incidents.

- 2% could not disclose their experiences.

Agentic AI Statistics

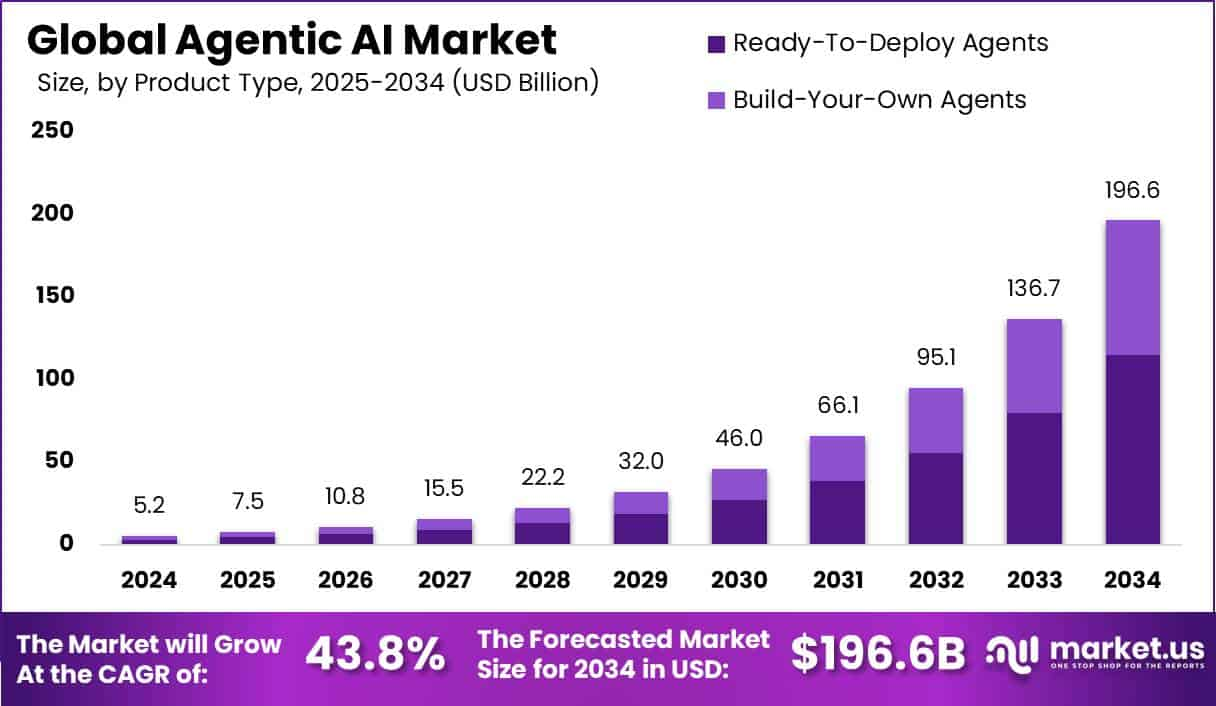

The global Agentic AI Market is set for rapid growth, expanding from USD 5.2 billion in 2024 to nearly USD 196.6 billion by 2034, at an impressive CAGR of 43.8% during 2025 to 2034. This surge reflects the rising use of autonomous AI systems that can plan, make decisions, and act independently across industries such as finance, healthcare, and enterprise automation.

In 2024, North America led the market with over 38% share, generating about USD 1.97 billion in revenue. Within the region, the U.S. accounted for USD 1.58 billion, supported by early adoption of advanced AI applications, strong investment activity, and the presence of leading technology players. With a projected CAGR of 43.6%, the U.S. market is expected to remain a central driver of agentic AI innovation and deployment over the next decade.
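The market figures above can be sanity-checked with the standard compound annual growth formula. The short Python sketch below assumes ten compounding years (2024 as the base year, 2034 as the end year); the function names are illustrative.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound `base` forward for `years` at annual growth rate `cagr`."""
    return base * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Global figures quoted in the text, in USD billions.
projected_2034 = project_value(5.2, 0.438, years=10)
print(f"Projected 2034 market size: {projected_2034:.1f}")  # roughly 196.6
print(f"Implied CAGR: {implied_cagr(5.2, 196.6, years=10):.1%}")
```

Under that assumption, the quoted 2024 base, 2034 target, and 43.8% CAGR are mutually consistent. The same `project_value` function can be applied to the U.S. figures (USD 1.58 billion at 43.6%).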

- In 2024, Ready-To-Deploy Agents led the Agentic AI market with 58.5% share.

- The Productivity & Personal Assistant segment captured 28.2% share in 2024.

- The Multi-Agent segment dominated with 66.4% share in 2024.

- The Enterprise segment held 62.7% share in 2024, showing strong business adoption.

- The U.S. Agentic AI market was valued at USD 1.58 billion in 2024, growing at a 43.6% CAGR.

- In 2024, North America led globally, capturing over 38% share of the Agentic AI market.

- By 2028, 33% of enterprise applications are expected to feature Agentic AI, up from less than 1% in 2024.

- According to the OECD, 90% of constituents are ready for AI agents in public service.

Sources:

- https://www.infosys.com/iki/research/responsible-enterprise-ai-agentic.html

- https://www.ibm.com/think/insights/responsible-ai-is-a-competitive-advantage

- https://toxsl.com/blog/541/how-responsible-ai-can-boost-your-business

- https://www.vktr.com/ai-ethics-law-risk/why-responsible-ai-will-define-the-next-decade-of-enterprise-success/

- https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/responsible-ai-a-business-imperative-for-telcos

- https://www.techpolicy.press/responsible-ai-as-a-business-necessity-three-forces-driving-market-adoption/