Arm, the British semiconductor design giant, has unveiled its next-generation chip platform called Lumex, specifically engineered to enhance artificial intelligence capabilities on mobile devices like smartphones and wearables. This new platform is designed to deliver significantly faster and more efficient AI processing directly on devices without relying on cloud connections, a crucial step toward private, real-time, and personalized AI experiences.

Key Features and Technological Advances

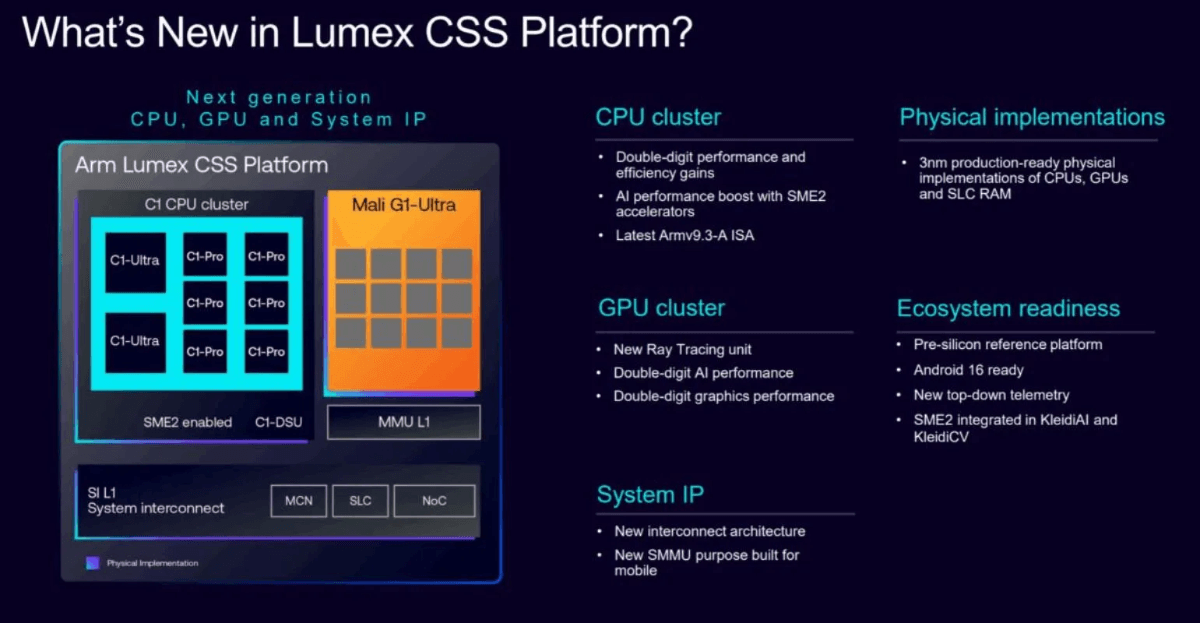

Lumex introduces advanced CPU and GPU architectures tailored for AI workloads on mobile platforms. The centerpiece is the Armv9.3 C1 CPU cluster, which integrates new Scalable Matrix Extension version 2 (SME2) units. According to Arm, these SME2 units accelerate AI tasks and deliver up to five times better AI performance and three times greater energy efficiency than its previous-generation CPU designs.
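SME2's matrix hardware is built around outer-product-and-accumulate operations. A matrix product C = A·B can be computed as a sum of outer products, one for each index of the shared dimension, all accumulated into a tile of results. The sketch below is a plain-Python illustration of that decomposition only. It does not use SME2 instructions or any Arm API, and `outer_product_accumulate` and `matmul_by_outer_products` are hypothetical names chosen for illustration.

```python
from typing import List

Matrix = List[List[float]]


def outer_product_accumulate(acc: Matrix, col: List[float], row: List[float]) -> None:
    """Add outer(col, row) into acc in place (hypothetical helper, one rank-1 update)."""
    for i, a in enumerate(col):
        for j, b in enumerate(row):
            acc[i][j] += a * b


def matmul_by_outer_products(A: Matrix, B: Matrix) -> Matrix:
    """Compute A @ B as a sum of k rank-1 updates, one per shared-dimension index."""
    m, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions must match")
    n = len(B[0])
    acc = [[0.0] * n for _ in range(m)]  # stands in for a hardware accumulator tile
    for p in range(k):
        col = [A[i][p] for i in range(m)]  # p-th column of A
        row = B[p]                         # p-th row of B
        outer_product_accumulate(acc, col, row)
    return acc


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
print(matmul_by_outer_products(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```

Matrix-extension hardware gains its speed by performing each rank-1 update across a whole tile in a single instruction rather than element by element, as this loop does.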

The Lumex lineup features four C1 CPU cores, covering a broad range of device needs:

- C1-Ultra: The flagship core optimized for peak AI performance, suitable for demanding tasks like large AI model inference and advanced computational photography.

- C1-Premium: Offers flagship-level performance but with better area efficiency, aimed at sub-flagship devices and multitasking workloads.

- C1-Pro: Focuses on sustained efficiency, ideal for streaming inference and video playback.

- C1-Nano: A highly efficient CPU core designed for ultra-low-power use cases such as wearables and small form-factor devices.

The platform also integrates the Mali G1-Ultra GPU to further accelerate graphics and AI workloads, bringing desktop-level AI experiences to mobile and wearable devices. Arm has designed Lumex for 3nm manufacturing processes, with CPU clock speeds above 4 GHz, so that Lumex chips can deliver both performance and efficiency for next-generation AI tasks.

Lumex chips deliver significant AI performance improvements across the board:

- Up to 5 times faster AI performance compared to Arm’s previous generation chips.

- 4.7 times lower latency in speech-based AI workloads, essential for real-time voice assistants.

- 2.8 times faster audio generation, improving media and communication apps.

- Up to 33% faster app launches in typical smartphone use.

- Up to 40% reduction in response time for large language model (LLM) interactions, demonstrated with partners Alipay and vivo.
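The figures above mix multipliers ("4.7 times lower latency") with percentages ("40% reduction in response time"). A quick conversion puts them on a common footing. This is a minimal sketch assuming each claim compares the same workload on old and new hardware; the helper names are hypothetical.

```python
def speedup_from_reduction(reduction: float) -> float:
    """Speedup implied by cutting time by `reduction` (0.40 means 40% less time)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


def reduction_from_factor(factor: float) -> float:
    """Fraction of time saved when latency falls by `factor` (4.7 means 4.7x lower)."""
    if factor <= 0.0:
        raise ValueError("factor must be positive")
    return 1.0 - 1.0 / factor


print(round(speedup_from_reduction(0.40), 2))  # 1.67
print(round(reduction_from_factor(4.7), 3))    # 0.787
```

Under that assumption, a 40% cut in LLM response time corresponds to roughly a 1.67x speedup, while 4.7 times lower speech latency means about 79% less waiting.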

Market and User Impact

Lumex aims to transform everyday AI experiences on mobile devices. By running AI on the device itself, Lumex lets users benefit from instant voice processing, real-time translation, improved camera capabilities, and faster app launches without sacrificing battery life or privacy. Tests have shown apps launching up to 33% faster, speech-based AI workloads running with nearly five times lower latency, and cameras denoising full HD video streams at 120 frames per second.

This leap in on-device AI performance directly addresses user demands for private and responsive AI services that do not require constant cloud access. It is especially critical in markets where connectivity may be limited or where privacy concerns are paramount.

Arm’s Strategic Shift Toward AI Chip Development

Arm’s Lumex launch marks a strategic pivot toward deeper involvement in AI chip development, beyond its traditional role as a licensor of chip architectures. The company recently hired Rami Sinno, a former AI chip director at Amazon credited with leading the development of Amazon’s Trainium and Inferentia AI processors, signaling Arm’s ambition to compete more directly in the AI hardware market.

This shift could enable Arm to offer full-stack AI chips optimized from silicon to software, an important advantage as demand for AI acceleration grows. Some analysts project the global AI hardware market will surpass $400 billion by 2030, and Arm’s energy-efficient architectures could position it to capture a large share of that growth, especially in power-sensitive mobile and edge deployments.

(Image Credit – ARM Newsroom)

Competitive Position and Future Outlook

By focusing on a platform that balances performance, energy efficiency, and privacy, Arm’s Lumex designs stand to challenge incumbent AI hardware providers in the mobile space. Lumex’s scalable, multi-tier CPU lineup allows licensees such as smartphone OEMs to tailor chips for everything from flagship phones down to small wearables.

Arm’s introduction of the Lumex platform also reflects a broader trend toward integrating AI deeply into consumer and IoT devices to meet expectations for always-available, real-time, and private AI experiences at lower power cost. Industry watchers expect Lumex-powered devices to appear in flagship smartphones and wearables by late 2025 or early 2026, setting a new standard for on-device AI.

In conclusion, Arm’s Lumex chips represent a significant leap forward in mobile AI technology, combining architectural innovation with strategic market positioning. This launch not only boosts Arm’s competitive edge, but also promises to enrich user experiences across a spectrum of connected devices by delivering faster, smarter, and more private AI capabilities directly where they are needed most.